Easy vs. hard predictions around AI investments

Ever since the launch of our Berenberg Global Focus Fund, we have been invested in companies with strong capabilities throughout the AI value chain. Over the last 18 months, however, AI as an investment thesis has gained considerably in popularity. The launch of ChatGPT in November 2022 propelled AI into the spotlight and made it a major topic of discussion both in the investment world and across global media. In this paper, we therefore aim to share some of our perspectives on this field.

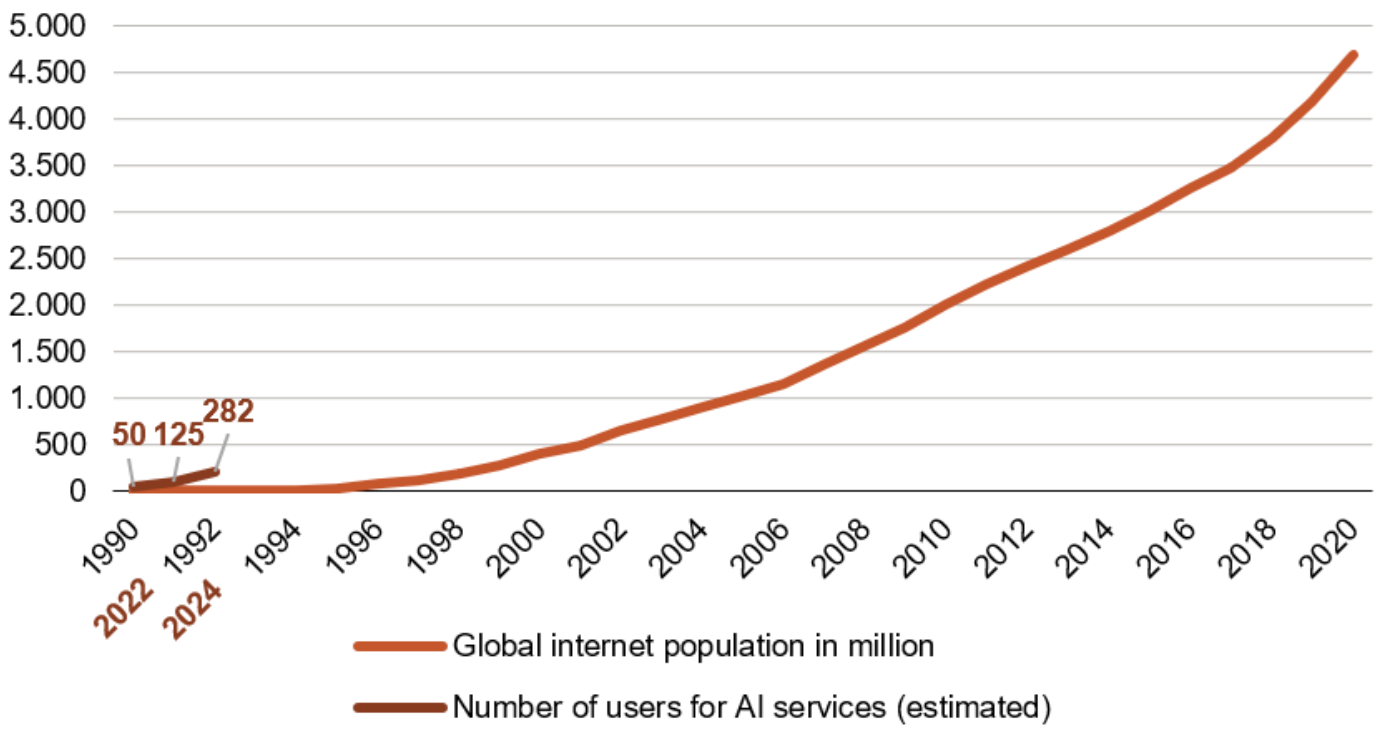

AI has the potential to transform industries, business models and our daily work. For example, as model capabilities improve and become more tailored to specific use cases, knowledge workers will likely experience significant productivity gains. In particular, developers using tools like GitHub’s Copilot or Google’s Gemini have already demonstrated significant productivity improvements. Drawing a parallel to the internet, it also becomes evident that we are still at an early stage of AI adoption. It took the internet 27 years to achieve a market penetration of 70%, with more than 5 billion users today. While ChatGPT already has c. 200m users, the number of paying subscriptions remains much lower at just a few million. Looking at enterprise AI use cases, Microsoft Copilots were among the first GenAI products, and we estimate there were fewer than 7m paying user licenses at the end of the last quarter, compared to over 1.2bn Microsoft Office users globally. It is important to note that the adoption of AI services has been much steeper than that of the internet, yet there is still a long runway of growth in terms of AI use cases as well as adoption of paid AI services.

Figure 1: There are a few hundred million users of AI services compared to an internet population of over 5 billion
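To put the gap between usage and paid adoption into numbers, the following minimal sketch computes the implied paid penetration of Microsoft Copilot from the two estimates quoted above. The variable and function names are our own illustrative choices, and because the inputs are bounds ("fewer than 7m", "over 1.2bn"), the result should be read as an approximate upper limit rather than a precise figure.

```python
# Rough inputs taken from the text above; both are estimates, not reported figures.
copilot_paid_licenses = 7_000_000   # "fewer than 7m" paying licenses (upper bound)
office_users = 1_200_000_000        # "over 1.2bn" Microsoft Office users (lower bound)


def penetration(paying: float, installed_base: float) -> float:
    """Return paying users as a fraction of the installed base."""
    return paying / installed_base


# Upper-bound numerator over lower-bound denominator gives an upper bound on the ratio.
copilot_penetration = penetration(copilot_paid_licenses, office_users)
print(f"Copilot paid penetration: at most {copilot_penetration:.2%}")
```

On these assumptions, well under 1% of the Office user base holds a paid Copilot license, which illustrates the long runway described above.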

Analysts have made various attempts to estimate the revenue-generating potential of GenAI services. Microsoft is probably one of the largest AI revenue generators, with AI services accounting for 7% of Azure growth, or $4 billion annualized, last quarter. Less than one year into the Copilot adoption cycle, Microsoft mentioned that Copilot has experienced one of the fastest product uptakes, surpassing similar product launches such as Microsoft’s Security Solution E5. There are also estimates that Copilots could achieve this growth with attractive gross margins of 70%. However, there have also been critical voices regarding upfront capital investments and the ability to generate revenue, such as Sequoia's study, "AI's $600B Question". The latter underlines the need to be cautious about oversupply in the market and the importance of distinguishing, from a product perspective, between companies that can bring applications and services with high demand to the market and those that cannot. The most recent earnings season, with various cross-currents in tech subsectors, has been a cautionary sign. Overall, we believe the AI race will remain hot, as we are already seeing a winner-takes-all scenario or at least the dominance of numerous vertical AI applications and products.

Three phases that could define the current AI wave

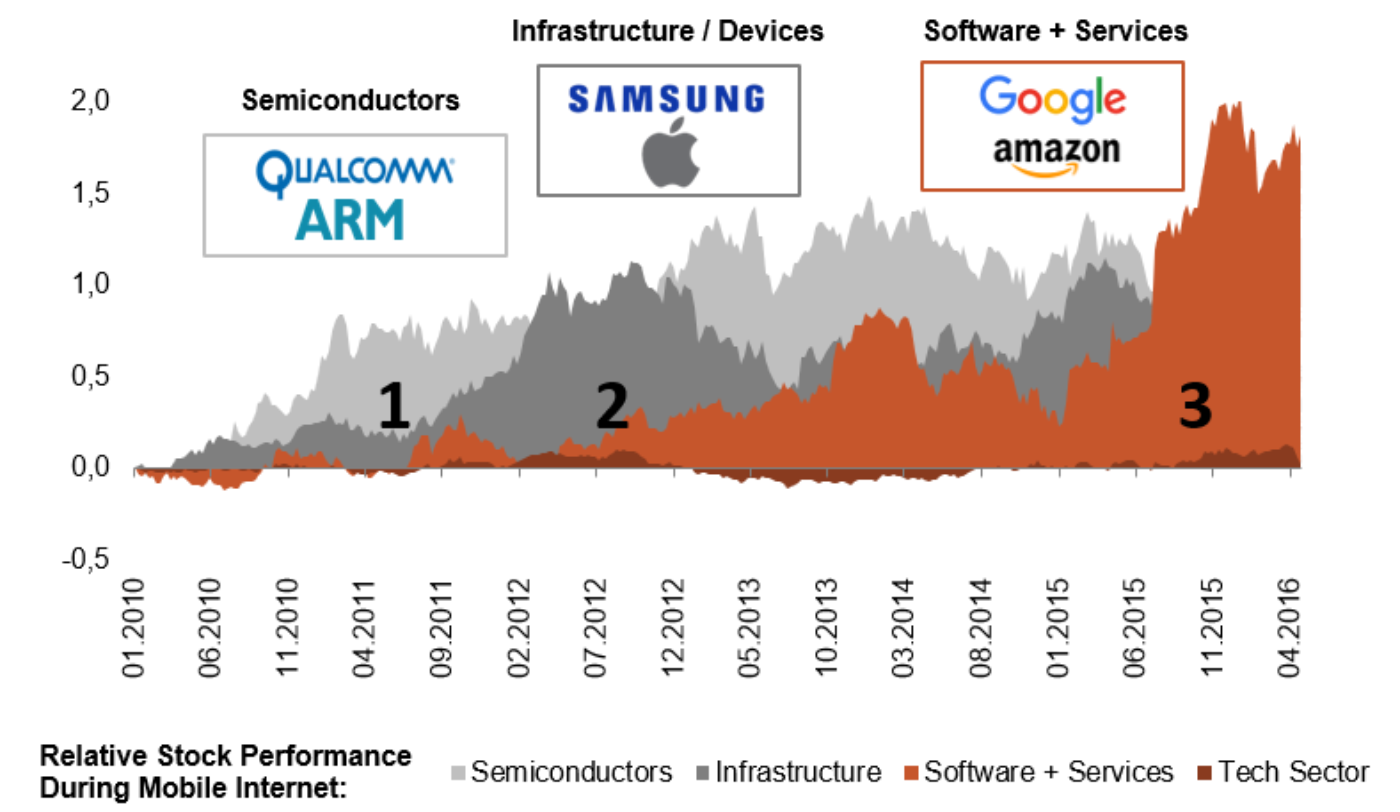

We are currently firmly in the first phase of the AI journey, characterized by datacenter upgrades to enable accelerated compute, new storage solutions and advanced networking architectures. So far, tech hardware companies have received a strong boost from US cloud service providers' spending, and the comeback of enterprise IT spending has only just started. The second phase, which we are beginning to observe, is defined by infrastructure being built out, mainly by the cloud service providers, to offer the tooling and services for enterprise customers. These customers are either using pre-defined AI applications or fine-tuning models using their own data. The third phase will likely see a vast number of new AI applications for enterprises and consumers. In principle, these three phases are similar to those we observed during the advent of the mobile internet, whereby the share prices of the players involved experienced different trends (see Figure 2).

Figure 2: Mobile era monetization cycle

Phase one: Changing the datacenter architecture

The cornerstone of utilizing advanced AI models is a modern datacenter infrastructure. In this context, graphics processing units (GPUs) have emerged as the workhorse of AI and ML computations, given the high degree to which these chips can leverage the power of parallel instead of serial data processing. Traditionally relegated to graphics rendering, GPUs have found a new purpose in accelerating the complex mathematical calculations inherent in AI algorithms. This shift has prompted datacenter operators to reevaluate their hardware configurations in terms of not only processing power but also networking and storage requirements.

In 2024, Amazon, Microsoft, Alphabet and Meta Platforms are expected to invest a combined $174 billion¹ in the build-out of their data center infrastructure, an increase of 49% year-over-year. A significant portion of this investment will be spent on the latest generation of high-performance GPUs and other accelerator chips.
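As a quick sanity check on the scale of this spending, the minimal sketch below backs out the prior-year investment level implied by the two figures quoted above ($174 billion, up 49% year-over-year). The derived numbers are our own arithmetic, not reported figures, and the variable names are purely illustrative.

```python
# Inputs quoted in the text above (expected 2024 combined spend and its growth rate).
capex_2024_bn = 174.0   # USD billions
yoy_growth = 0.49       # 49% year-over-year increase

# Implied prior-year spend: this year's level divided by (1 + growth rate).
capex_2023_bn = capex_2024_bn / (1 + yoy_growth)

# Absolute increase in spending between the two years.
increase_bn = capex_2024_bn - capex_2023_bn

print(f"Implied 2023 spend: ${capex_2023_bn:.1f}bn")
print(f"Implied increase:   ${increase_bn:.1f}bn")
```

Under these assumptions, the four companies would have spent roughly $117 billion in the prior year, implying about $57 billion of incremental investment in 2024.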

While Nvidia currently holds a near-monopoly position in server GPUs, competitive pressure from hardware alternatives, as well as the fragmentation of workload use cases away from training workloads, is likely to increase in the medium term. All three major cloud providers have announced their own in-house programs to develop custom silicon, with Google’s TPU for AI and machine learning currently appearing to be the most competitive offering. Marvell Technology is one of a few companies providing design and IP services to all major cloud service providers and is benefitting from the networking upgrades within AI datacenters.

In addition, the strong demand for high-performance chips is impacting various players across the semiconductor supply chain, ranging from wafer production to chip designers, manufacturers, test equipment, networking, and storage. ASML is a prime example from our portfolio, with a leading market position that is benefiting from this trend.

Phase two: Building the infrastructure

Following the build-out of the hardware infrastructure, the next phase focuses on offering the necessary tools and services for the customization and fine-tuning of large language models (LLMs). Given the high capital needs for the infrastructure investments, large players benefit from economies of scale during the build-out phase. Today, enterprises find it easier to start their internal AI journey by tapping into hyperscaler offerings than by trying to build infrastructure themselves. In this context, major US cloud players are well-positioned to become one-stop shops for AI services, providing access to the relevant hardware, talent, LLMs and developer tools.

Microsoft is currently best positioned in this regard, boasting the strongest AI growth rates among cloud service providers. It provides a full suite of AI-powered productivity solutions called Copilot. Azure AI serves as an end-to-end platform for data scientists and app developers to build their own custom AI experiences based on both Microsoft and third-party models. Additionally, Microsoft is at the forefront of edge AI with newly launched Copilot-powered laptops.

Another example to illustrate this point is AWS, which currently offers a broad range of AI services along with long-established strength in the analysis of unstructured data. Via Bedrock, AWS customers can access LLMs from various third-party providers such as Anthropic, Stability AI, Cohere or AI21 and customize the respective models using their own data. In this way, Amazon intends to act as an overarching platform for LLMs, combining access to leading third-party models with corresponding hardware and services.

Beyond technical infrastructure, there will also be a need to upgrade physical infrastructure such as electrical equipment, power stations and energy generation. While we acknowledge the growth opportunities and possibly strong demand, companies in that part of the market operate in a rather commoditized area of the value chain, with lower barriers to entry and limited pricing power.

Phase three: Applications across vertical AI and consumer experiences

We identify two main AI use cases that bring tangible value to enterprise customers: first, creating new products and experiences by integrating AI into software applications and analytics; and second, improving internal processes and workflows by implementing AI-enabled improvements that raise efficiency and reduce complexity. This has already been evident in company results over recent quarters, as software companies with new, high-demand AI products pulled ahead of peers that lacked a strong product roadmap.

Microsoft, with its Copilots, is a prime example of leveraging AI tools for knowledge workers. Its GitHub Copilot, which was introduced a year ago, boasts almost 2m paying subscribers, growing at over 100% annually. Moreover, enterprises can now build their own customized Copilots leveraging their own internal data. We believe there will be a significant market for AI-infused software across numerous verticals, making traditional software smarter and introducing new problem-solving capabilities.

Another example of a software company that is excelling with AI-enhanced products is ServiceNow. With its new Pro Plus offering, users can generate code and summaries from natural language text descriptions, increasing development speed and low-code production. The field is also open in other vertical AI applications, such as finance. LSE Group, a leading global financial data enterprise, has partnered with Microsoft to transform its data intelligence, analytics and workspace offerings with AI and analytics capabilities. The main goal is to enhance workflows and improve insights for finance professionals by offering a digital research assistant.

While there are already tangible products for enterprise users, the product and monetization story for consumer companies is still nascent, with use cases such as OpenAI’s paid version of ChatGPT. An exciting emerging field is the development of GenAI search as well as AI assistants. The main benefits of these services are hyper-personalized experiences, leading to high customer stickiness and strong conversion of recommendations. The Apple partnership with Microsoft and OpenAI has shown that scaled LLM players with a broad and deep offering are the go-to partners for AI and model capabilities.

Conclusion: AI adoption takes time and opens investment opportunities across sectors

We expect a long runway of growth in terms of AI use cases as well as adoption of paid AI services. In our view, AI gives companies the potential to vastly improve product performance and services, raise efficiency and reduce complexity. As a first step, enterprises, and in particular cloud service providers, led the investment phase to upgrade datacenters for parallel compute. This involved not only adding more GPUs but also investing in advanced networking and storage technologies. Besides semiconductors, we anticipate that attractive investment opportunities will arise among scalable infrastructure providers and companies that are able to leverage internal data to create market-leading software products and insights in the future. We identify companies that are best positioned to fully capitalize on this technology trend and have over one-third of our fund positioned towards those leading innovators.

We are already seeing a winner-takes-all scenario, or at least dominance within the AI tech stack, which makes stock-picking even more important. Further, we see global equity markets, and especially the US, as a fertile hunting ground for attractive investment opportunities across the AI value chain. However, more than a year into the investment phase, there are more critical voices on the return on the investments made. Assessing which companies see strong product and revenue velocity becomes even more important. This raises risks in parts of the AI investment space that are already overheated from a share price and valuation perspective. Nevertheless, we find attractive opportunities across various sectors and see AI as one of the most interesting structural trends to be invested in.

Case studies of AI beneficiaries

ASML – Semiconductor

ASML is the leading lithography equipment company with a quasi-monopoly in lithography for leading-edge chips. The company should benefit from AI, driven by a shift to smaller nodes such as 2nm for AI-related chips as well as higher lithography intensity from high-bandwidth memory.

Marvell – Semiconductor

Marvell is a US-based data infrastructure technology company with leading market positions in networking and in the development of custom chips. Marvell’s signal processors facilitate fast and reliable data transmission and benefit from increasing speed requirements in data centres. The company also provides deep expertise in the development of optimized silicon and has projects with all major US cloud providers in place.

Microsoft – Software

Microsoft probably has the leading AI footprint in global software. Microsoft’s AI assets range from accelerated computing with Azure, large language models such as those from OpenAI, and Microsoft Fabric, an AI platform for developers, to work productivity tools such as Copilot.

ServiceNow – Software

ServiceNow is a cloud-based platform designed to streamline and automate business workflows. The company acts as a “platform of platforms”, which seamlessly integrates with various applications in a low-code environment. With Now Assist, the company leverages AI to provide summarization, text-to-code, text-to-workflow and error checking.

Datadog – Software

Datadog provides a platform to monitor the performance of cloud-based workloads. Its tools give enterprises a comprehensive view of different servers, applications, and cloud-based software in one place. The company provides one of the broadest product offerings in AI-based observability tools, spanning Watchdog (AI-based detection of irregularities), LLM Observability (a centralised view of all AI models) and BitsAI, Datadog’s AI Copilot.

Amazon – Cloud computing and E-commerce

Amazon is the global market leader in cloud computing. The company provides significant scale-related cost advantages, a wide range of AI services and a proprietary hardware stack (Graviton, Trainium, Inferentia). It is currently focused on a three-layered AI approach, which comprises compute, access to various third-party LLMs via Bedrock, and applications built on top of the LLMs, for example Amazon’s coding companion.

Meta Platforms – Social Media

Meta offers leading consumer applications such as Instagram, Facebook and WhatsApp. It has one of the largest GPU footprints globally and was one of the first adopters of AI to enhance its recommendation, ad tooling and tracking algorithms. Meta developed the most successful open-source LLM, Llama3, and is currently investing in an AI assistant via Meta AI.

LSE Group – Financial Data

LSE Group is a leading global financial data enterprise as well as the owner of Russell Indices and the London Stock Exchange. LSE has a partnership with Microsoft to transform its data intelligence, analytics and workspace offerings with AI and analytics capabilities to enhance workflows and results for finance professionals.

Authors

Martin Hermann

Martin Hermann has been a Portfolio Manager at Berenberg since October 2017. He is responsible for the Berenberg Global Focus Fund and the global wealth management strategy “Equity Growth”, and serves as co-portfolio manager of the Berenberg European Focus Fund. Prior to joining Berenberg, he was a Portfolio Manager within Allianz Global Investors’ award-winning Europe Equity Growth Team and deputy portfolio manager of the International Equity Growth Fund. He began his career in 2010 as an investment trainee in the Graduate Programme at Allianz Global Investors. Martin Hermann holds a Master’s degree in Investment Analysis and Corporate Finance from the University of Vienna and is a CFA charterholder.

Tim Gottschalk

Tim Gottschalk has been a Portfolio Manager at Berenberg since January 2022. He started his career in the Berenberg International Graduate Program with assignments in Asset Management, Wealth Management and Equity Research. Tim Gottschalk holds a Bachelor of Science in Business Administration and a Master of Science in Finance with Distinction from the University of Cologne with stays abroad in Dublin and Stockholm.